System Haptics: 7 Revolutionary Advances You Can’t Ignore

Imagine feeling the texture of a fabric through your phone or sensing a virtual heartbeat in a game. That’s the magic of system haptics—where touch meets technology in the most immersive way possible.

What Are System Haptics and How Do They Work?

System haptics refers to the integrated technology that delivers tactile feedback through vibrations, forces, and motions in electronic devices. It’s not just about a simple buzz; it’s a sophisticated communication channel between the device and the user’s sense of touch. This technology is embedded in smartphones, gaming controllers, wearables, and even medical simulators, transforming how we interact with digital interfaces.

The Science Behind Tactile Feedback

At its core, system haptics relies on actuators: small electromechanical components, often miniature motors, that generate physical sensations. These actuators respond to software commands, producing vibrations of varying intensity, frequency, and duration. The human skin, particularly on the fingertips and palms, is highly sensitive to these micro-movements, allowing users to perceive nuanced feedback like clicks, taps, or even simulated textures.

- Electrostatic actuators create surface friction changes for ‘sliding’ sensations.

- Linear resonant actuators (LRAs) produce precise, directional vibrations.

- Eccentric rotating mass (ERM) motors generate broad, less precise vibrations.

According to research published on ScienceDirect, the effectiveness of haptic feedback depends on users’ psychophysical response, meaning the sensation must be calibrated to human perception thresholds.
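To make “intensity, frequency, and duration” concrete, here is a minimal sketch that turns those three parameters into a sampled drive signal: a sine burst with a short linear fade-in and fade-out. The sample rate, ramp length, and the function name `synthesize_burst` are illustrative assumptions, not any vendor’s driver API.

```python
import math

def synthesize_burst(intensity, frequency_hz, duration_s,
                     sample_rate=8000, ramp_s=0.005):
    """Return drive samples in [-1, 1] for one vibration burst.

    intensity:    peak amplitude on a hypothetical normalized 0.0-1.0 scale
    frequency_hz: vibration frequency
    duration_s:   burst length in seconds
    ramp_s:       linear fade-in/fade-out so the burst does not start or stop abruptly
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be in [0, 1]")
    n = int(round(duration_s * sample_rate))
    ramp_n = max(1, int(round(ramp_s * sample_rate)))
    samples = []
    for i in range(n):
        t = i / sample_rate
        # Envelope climbs over the first ramp_n samples and falls over the last ramp_n.
        envelope = min(1.0, (i + 1) / ramp_n, (n - i) / ramp_n)
        samples.append(intensity * envelope * math.sin(2 * math.pi * frequency_hz * t))
    return samples

# A 20 ms, 170 Hz "tap" at 60% intensity: 160 samples at 8 kHz.
tap = synthesize_burst(0.6, 170, 0.020)
```

The fade ramps matter because an instantaneous jump in drive level tends to be felt (and heard) as a click rather than a clean tap; a real driver would also scale the samples to its own voltage range.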

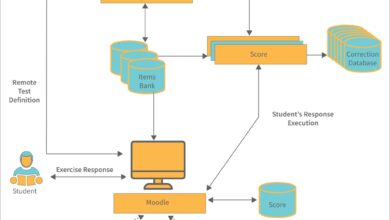

Components of a Modern Haptic System

A complete system haptics setup includes hardware, firmware, and software layers working in harmony. The hardware consists of actuators and sensors, while firmware controls timing and intensity. The software layer interprets user actions and triggers appropriate haptic responses.

- Actuators: Convert electrical signals into mechanical motion.

- Sensors: Detect user input (e.g., touch pressure, motion).

- Haptic Drivers: Dedicated driver ICs or microcontrollers that generate drive signals and manage actuator behavior.

- APIs: Allow developers to integrate custom haptic patterns.
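The division of labor between these layers can be sketched in a few classes: the software layer maps a user action to a named pattern, the driver layer enforces the actuator’s limits, and an actuator stub records what it was asked to play. Every class name, pattern name, and limit below is hypothetical; real drivers run on dedicated hardware rather than in Python.

```python
from dataclasses import dataclass, field

@dataclass
class Pulse:
    intensity: float    # normalized 0.0-1.0
    duration_ms: int

@dataclass
class ActuatorStub:
    """Stands in for the hardware layer: records every pulse it is driven with."""
    played: list = field(default_factory=list)

    def drive(self, pulse: Pulse) -> None:
        self.played.append(pulse)

class HapticDriver:
    """Firmware/driver layer: clamps requests to limits the actuator can handle."""

    def __init__(self, actuator, max_intensity=0.8, max_duration_ms=500):
        self.actuator = actuator
        self.max_intensity = max_intensity
        self.max_duration_ms = max_duration_ms

    def play(self, pulses):
        for p in pulses:
            intensity = min(max(p.intensity, 0.0), self.max_intensity)
            duration = min(p.duration_ms, self.max_duration_ms)
            self.actuator.drive(Pulse(intensity, duration))

# Software layer: interprets user actions and picks a named pattern.
PATTERNS = {
    "key_press": [Pulse(0.3, 10)],
    "message": [Pulse(0.5, 20)],     # soft tap
    "alarm": [Pulse(1.0, 300)],      # strong pulse
}

def on_user_action(action, driver):
    pattern = PATTERNS.get(action)
    if pattern is not None:          # unknown actions produce no feedback
        driver.play(pattern)

actuator = ActuatorStub()
driver = HapticDriver(actuator)
on_user_action("alarm", driver)
# actuator.played now holds one 300 ms pulse, clamped to intensity 0.8
```

Keeping the safety clamp in the driver layer means no application-level pattern can push the actuator past its limits, whatever the software layer requests.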

“Haptics is the missing link in multisensory interfaces—without touch, digital experiences remain flat and disconnected.” — Dr. Lynette Jones, MIT Senior Research Scientist

Evolution of System Haptics: From Buzz to Precision

The journey of system haptics began with rudimentary vibration alerts in pagers and early mobile phones. These used basic ERM motors that offered little control. But as technology advanced, so did the sophistication of haptic feedback, leading to today’s high-fidelity tactile experiences.

First-Generation Haptics: The Buzz Era

In the late 1990s and early 2000s, most mobile devices used ERM motors. These were effective for alerts but lacked precision. The vibration was often described as ‘jarring’ or ‘unrefined,’ limiting their use to binary notifications—on or off.

- Limited control over vibration intensity.

- High power consumption.

- Slow response time and long decay.

Despite these limitations, this era laid the groundwork for user expectations around tactile feedback. As noted in IEEE publications, early haptics helped establish the concept of non-visual feedback in human-computer interaction.
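One simple way to see why slow response and long decay matter is to model an actuator’s vibration amplitude as a first-order lag: it approaches the commanded level with time constant τ, so covering 90% of the change takes τ·ln(10). The time constants below are placeholder values chosen for illustration, not measurements of any real motor.

```python
import math

def time_to_fraction(tau_ms, fraction):
    """Time (ms) for a first-order system to cover `fraction` of a step change.

    Rising amplitude follows a(t) = 1 - exp(-t / tau), so t = -tau * ln(1 - fraction).
    By symmetry, the same time is needed to decay by that fraction after switch-off.
    """
    if not 0.0 < fraction < 1.0:
        raise ValueError("fraction must be strictly between 0 and 1")
    return -tau_ms * math.log(1.0 - fraction)

# Placeholder time constants, for illustration only.
for name, tau in [("slow (ERM-like) actuator", 30.0), ("fast actuator", 5.0)]:
    print(f"{name}: {time_to_fraction(tau, 0.9):.1f} ms to reach 90%")
```

With these placeholder numbers, the slow actuator needs about 69 ms to reach 90% amplitude and as long again to fade, which is the “slow response and long decay” problem in miniature: a crisp 10 ms click is simply not possible.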

Second-Generation: Rise of Linear Actuators

The introduction of Linear Resonant Actuators (LRAs) marked a turning point. An LRA uses a voice coil and magnet to drive a spring-mounted mass back and forth along a single axis, typically at its resonant frequency, enabling faster, cleaner, and more controllable vibrations. Apple’s Taptic Engine, which debuted in the Apple Watch and reached the iPhone with the iPhone 6s in 2015, is a prime example of LRA-based system haptics.

- Higher energy efficiency compared to ERMs.

- Sharper, more localized feedback.

- Support for complex waveforms and sequences.

This advancement allowed for contextual haptics—different vibrations for different actions, like a soft tap for a message and a stronger pulse for an alarm.
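Because an LRA is essentially a mass on a spring, it moves most efficiently near its resonant frequency. The standard driven, damped oscillator model shows how steeply the response falls away from resonance: the normalized amplitude is 1/√((1 − r²)² + (r/Q)²), where r is the drive frequency divided by the resonant frequency and Q is the quality factor. The 170 Hz resonance and Q of 10 are illustrative assumptions; real LRAs vary, and some driver ICs track the actual resonance rather than assume it.

```python
import math

def lra_amplitude(drive_hz, resonant_hz=170.0, q=10.0):
    """Steady-state displacement of a driven mass-spring-damper,
    relative to its displacement under a static force of the same size."""
    r = drive_hz / resonant_hz
    return 1.0 / math.sqrt((1.0 - r * r) ** 2 + (r / q) ** 2)

for f in (120, 150, 170, 190, 220):
    print(f"{f} Hz -> {lra_amplitude(f):.2f}x")
```

Under these assumptions, driving just 20 Hz off resonance cuts the displacement to less than half of its value at resonance, which is why LRA drivers work hard to stay locked to the resonant frequency.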

Third-Generation: Smart and Adaptive Haptics

Today’s system haptics are increasingly adaptive. They adjust based on user behavior, environment, and application context. For instance, a gaming controller might increase vibration intensity during high-action scenes, while a smartwatch reduces feedback in silent mode.

- Machine learning algorithms optimize haptic profiles.

- Integration with motion sensors for dynamic response.

- Customizable feedback via user settings.

Companies like Boroband are pioneering adaptive haptics that respond in real-time to user grip and movement, enhancing immersion in VR and AR environments.
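A rule-based version of this context adaptation is easy to sketch: start from a base intensity and scale it by the current context. The context fields and scaling factors are invented for illustration; a production system might learn such factors from usage data instead of hard-coding them.

```python
from dataclasses import dataclass

@dataclass
class Context:
    silent_mode: bool = False
    gameplay_intensity: float = 0.0   # 0.0 = calm scene, 1.0 = most intense moment

def adapted_intensity(base, ctx):
    """Scale a base intensity (0-1) by context; the factors are hypothetical."""
    level = base
    if ctx.silent_mode:
        level *= 0.5                               # more discreet feedback
    level *= 1.0 + 0.5 * ctx.gameplay_intensity    # up to 50% stronger in intense scenes
    return max(0.0, min(1.0, level))               # keep within the actuator's range

print(adapted_intensity(0.8, Context(gameplay_intensity=1.0)))  # 1.0 (clamped)
```

Clamping last keeps the rules composable: each rule can scale freely, and the final value is always something the actuator can play.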

Applications of System Haptics Across Industries

System haptics is no longer confined to consumer electronics. Its applications span multiple industries, revolutionizing how professionals and users interact with technology.

Smartphones and Wearables

In smartphones, system haptics enhance user experience by providing tactile confirmation for actions like typing, scrolling, or receiving notifications. The iPhone’s 3D Touch (since replaced by Haptic Touch) paired pressure sensing with Taptic Engine feedback, and the iPhone 7’s solid-state Home button used haptics to simulate a press without a mechanical switch.

- Simulates keyboard clicks on virtual keypads.

- Provides navigation cues in mapping apps.

- Enhances accessibility for visually impaired users.

Wearables like the Apple Watch use haptics for discreet alerts—tapping the wrist to signal a call or guiding users with directional pulses during navigation.
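Directional cues work because the wearer can tell patterns apart by rhythm alone. The encoding below (three short taps for a left turn, two long taps for a right turn) is purely hypothetical and is not Apple’s actual scheme; it only shows how a turn instruction becomes a timed pulse sequence.

```python
# Each step is (vibrate_ms, pause_ms). This encoding is a made-up example.
TURN_PATTERNS = {
    "left": [(40, 80), (40, 80), (40, 0)],   # three short taps
    "right": [(150, 120), (150, 0)],         # two long taps
    "arrived": [(300, 0)],                   # one sustained pulse
}

def to_timeline(steps):
    """Convert (on, off) steps into absolute (start_ms, end_ms) vibration intervals."""
    t = 0
    intervals = []
    for on_ms, off_ms in steps:
        intervals.append((t, t + on_ms))
        t += on_ms + off_ms
    return intervals

print(to_timeline(TURN_PATTERNS["left"]))  # [(0, 40), (120, 160), (240, 280)]
```

Patterns that differ in both count and duration stay distinguishable even when the user is walking and only half paying attention.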

Gaming and Virtual Reality

Gaming is one of the most immersive domains for system haptics. Controllers ranging from Sony’s DualSense to Valve’s Index controllers (nicknamed ‘Knuckles’) deliver dynamic feedback that mimics in-game actions, like the tension of a bowstring or the recoil of a gun.

- DualSense controller features adaptive triggers and haptic motors.

- VR gloves use haptics to simulate object weight and texture.

- Haptic suits provide full-body feedback for immersive training simulations.

According to a Gartner report, over 60% of next-gen gaming experiences will incorporate advanced haptics by 2026.

“Haptics transforms VR from visual illusion to embodied experience.” — Mark Bolas, Professor of Immersive Media

Medical Training and Rehabilitation

In healthcare, system haptics enables realistic surgical simulations. Trainees can feel the resistance of tissue or the slip of a scalpel, improving muscle memory and precision. Haptic feedback is also used in robotic surgery systems to give surgeons tactile cues from remote instruments.

- Simulators for laparoscopic and dental procedures.

- Haptic prosthetics that restore touch sensation.

- Rehabilitation devices that guide motor recovery through feedback.

A study published in IEEE Transactions on Haptics found that haptic-enabled training reduced surgical errors by up to 30% compared to visual-only methods.
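Simulators produce the “resistance of tissue” by computing a force from the tool’s position in a fast loop, commonly cited at around 1 kHz. A classic textbook approach is penalty-based rendering: once the virtual tool penetrates a surface, push back with a spring force proportional to penetration depth, plus damping. The stiffness and damping values below are arbitrary placeholders; real surgical simulators use far richer tissue models.

```python
def tissue_force(tool_depth_mm, tool_velocity_mm_s,
                 surface_mm=0.0,
                 stiffness_n_per_mm=0.3,
                 damping_n_s_per_mm=0.002):
    """Penalty-based force (newtons) pushing the tool back out of virtual tissue.

    tool_depth_mm: tool tip position along the insertion axis (larger = deeper).
    tool_velocity_mm_s: positive when moving deeper.
    Returns 0 when the tool has not reached the surface.
    """
    penetration = tool_depth_mm - surface_mm
    if penetration <= 0.0:
        return 0.0
    # Spring term resists depth; damping term resists moving further in.
    force = stiffness_n_per_mm * penetration + damping_n_s_per_mm * tool_velocity_mm_s
    return max(force, 0.0)   # tissue pushes back; it never pulls the tool inward

print(tissue_force(2.0, 0.0))   # about 0.6 N at 2 mm depth, tool at rest
```

The damping term is what makes fast insertion feel stiffer than slow insertion, and the final clamp prevents the model from “sucking” the tool in when it is withdrawn quickly.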

Automotive and Driver Assistance

Modern vehicles use system haptics in steering wheels, seats, and pedals to alert drivers without distracting visuals. For example, a vibrating steering wheel can signal lane departure, while a pulsing seat might indicate a blind-spot hazard.

- Haptic feedback in autonomous driving alerts.

- Tactile cues for navigation systems.

- Integration with ADAS (Advanced Driver Assistance Systems).

BMW and Tesla have incorporated haptic pedals that resist acceleration in eco-mode, subtly encouraging fuel-efficient driving.
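Choosing where to deliver a cue is itself a small piece of logic: the hazard type decides which actuator fires, and the side of the car decides which part of it. This routing table is a hypothetical example, not any manufacturer’s ADAS implementation.

```python
from enum import Enum

class Hazard(Enum):
    LANE_DEPARTURE = "lane_departure"
    BLIND_SPOT = "blind_spot"
    FORWARD_COLLISION = "forward_collision"

def route_alert(hazard, side):
    """Return (actuator, zone) for a hazard; the mapping is illustrative only."""
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    if hazard is Hazard.LANE_DEPARTURE:
        return ("steering_wheel", side)
    if hazard is Hazard.BLIND_SPOT:
        return ("seat", side)        # pulse the seat on the threatened side
    return ("seat", "both")          # urgent, non-directional warning

print(route_alert(Hazard.BLIND_SPOT, "left"))  # ('seat', 'left')
```

Mapping the side of the hazard to the side of the body lets the driver know where to look without reading a display.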

Key Players and Innovations in System Haptics

The system haptics landscape is shaped by a mix of tech giants, startups, and research institutions pushing the boundaries of tactile technology.

Apple and the Taptic Engine

Apple’s Taptic Engine is arguably the most refined implementation of system haptics in consumer devices. Introduced in 2015, it replaced traditional vibration motors with a linear actuator capable of delivering over 200 distinct haptic patterns.

- Used in iPhones, Apple Watch, and MacBook trackpads.

- Enables Haptic Touch, silent alarms, and accessibility features.

- Exposed to developers through iOS APIs such as UIFeedbackGenerator and Core Haptics.

Apple’s Human Interface Guidelines emphasize haptics as a core part of user experience design, encouraging developers to use feedback meaningfully rather than excessively.

Sony’s DualSense Controller

Sony’s PlayStation 5 DualSense controller redefined gaming haptics with two key features: adaptive triggers and advanced haptic feedback. Unlike traditional rumble, the DualSense can simulate varying levels of resistance and texture.

- Adaptive triggers can tighten to mimic drawing a bow.

- Haptic motors deliver nuanced sensations like rain or terrain changes.

- Supported by games like Returnal and God of War Ragnarök.

Sony’s innovation has set a new benchmark, prompting competitors like Microsoft to explore similar technologies for future Xbox controllers.
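The bow-drawing effect can be described as a resistance curve over trigger travel: little resistance at first, rising as the string is drawn, then a sudden drop once the “arrow” is released. The function below sketches such a curve with made-up breakpoints. It does not reflect Sony’s developer tools, and its output is a normalized 0-1 resistance, not a motor command.

```python
def bow_trigger_resistance(travel, release_point=0.9):
    """Normalized trigger resistance (0-1) for a hypothetical bow-draw effect.

    travel: trigger position from 0.0 (released) to 1.0 (fully pressed).
    Resistance ramps up quadratically as the 'string' is drawn, then drops
    to zero past release_point to mimic the arrow letting go.
    """
    if not 0.0 <= travel <= 1.0:
        raise ValueError("travel must be in [0, 1]")
    if travel >= release_point:
        return 0.0
    return (travel / release_point) ** 2

for x in (0.0, 0.3, 0.6, 0.89, 0.95):
    print(x, round(bow_trigger_resistance(x), 2))
```

The abrupt drop at the release point is the important design choice: the sudden loss of resistance is what the player reads as the shot.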

Boroband and Wearable Haptics

Boroband is a rising star in the haptics space, focusing on wearable devices that deliver immersive tactile experiences. Their haptic armbands are used in VR, training, and entertainment to simulate touch across the body.

- Uses multiple actuators for spatial feedback.

- Programmable via SDK for custom experiences.

- Used in military and medical training simulations.

Their technology bridges the gap between audiovisual immersion and physical sensation, making virtual environments feel more real.

Technical Challenges in System Haptics Development

Despite rapid progress, system haptics face several technical and design challenges that limit widespread adoption and effectiveness.

Power Consumption and Battery Life

Haptic actuators can be power-hungry, especially when driven at high intensity or for long periods. Continuous use drains batteries quickly, a major concern for mobile and wearable devices.

- ERM motors are less efficient but cheaper.

- LRAs offer better performance but require optimized drivers.

- Battery trade-offs often limit haptic intensity or duration.

Engineers are exploring piezoelectric actuators, which consume less power and respond faster, as a potential solution.
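A back-of-the-envelope calculation shows why duty cycle matters more than any single buzz. Energy drawn is average power multiplied by time, so the share of a battery spent on haptics scales with how long the actuator actually runs. All numbers below (actuator power, battery capacity, daily usage) are hypothetical round figures, not specifications of any device.

```python
def haptic_battery_share(actuator_power_w, active_seconds_per_day, battery_wh):
    """Fraction of one full battery charge consumed by haptics per day."""
    energy_wh = actuator_power_w * active_seconds_per_day / 3600.0
    return energy_wh / battery_wh

# Hypothetical: a 0.1 W actuator in a watch with a 1.2 Wh battery.
light = haptic_battery_share(0.1, 60, 1.2)     # one minute of vibration per day
heavy = haptic_battery_share(0.1, 3600, 1.2)   # one hour, e.g. continuous feedback
print(f"{light:.2%} vs {heavy:.2%}")
```

Under these assumptions, an hour of continuous vibration costs roughly 60 times as much as brief alerts, and a small wearable battery feels that difference far more than a phone does.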

Latency and Synchronization

For haptics to feel natural, feedback must be synchronized with visual and auditory cues. Even a 50 ms delay can break immersion, especially in VR and gaming.

- Software processing delays can lag haptic triggers.

- Hardware response time varies by actuator type.

- Network latency affects cloud-based haptic streaming.

Real-time operating systems (RTOS) and low-latency wireless links (for example, Bluetooth LE configured with short connection intervals) are being adopted to minimize delays.
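Treating latency as a budget makes the problem tractable: add up each stage between the triggering event and the actuator reaching perceptible strength, then compare the total against the target. The stage names and millisecond figures below are placeholders; only the 50 ms threshold comes from the discussion of synchronization.

```python
def check_latency_budget(stages, budget_ms=50.0):
    """Sum per-stage latencies (ms); report total, whether it fits, and the worst stage."""
    total = sum(stages.values())
    worst_stage = max(stages, key=stages.get)
    return total, total <= budget_ms, worst_stage

# Hypothetical pipeline for a wireless controller.
stages = {
    "game_logic": 8.0,
    "haptic_render": 2.0,
    "wireless_link": 15.0,
    "driver": 1.0,
    "actuator_rise": 20.0,
}
total, ok, worst = check_latency_budget(stages)
print(total, ok, worst)   # 46.0 True actuator_rise
```

Reporting the worst stage points optimization effort where it pays off; in this made-up pipeline, a faster actuator would buy more headroom than a faster radio.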

Standardization and Fragmentation

Unlike audio or video, there’s no universal standard for haptic content. Each manufacturer uses proprietary formats, making it hard for developers to create cross-platform experiences.

- Apple uses its own Core Haptics API and AHAP (Apple Haptic and Audio Pattern) file format.

- Android supports multiple vendors with varying capabilities.

- No common file format for haptic patterns (like MP3 for audio).

Organizations like the Web3D Consortium are working on H-Anim and X3D standards to enable interoperable haptic content.
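The fragmentation is easy to see by expressing one short pattern in two platform formats. This sketch starts from a neutral list of pulses and emits (a) a dictionary in the shape of Apple’s AHAP JSON files used by Core Haptics and (b) the parallel timing and amplitude arrays that Android’s `VibrationEffect.createWaveform` accepts. The neutral pulse format and the fixed sharpness value are invented for this example; check each platform’s documentation before relying on field details.

```python
import json

# Neutral, made-up format: (start_ms, duration_ms, intensity 0-1), sorted, non-overlapping.
PATTERN = [(0, 30, 1.0), (100, 200, 0.5)]

def to_ahap(pulses, sharpness=0.5):
    """Build an AHAP-style dictionary of HapticContinuous events (times in seconds)."""
    events = []
    for start_ms, dur_ms, intensity in pulses:
        events.append({"Event": {
            "Time": start_ms / 1000.0,
            "EventType": "HapticContinuous",
            "EventDuration": dur_ms / 1000.0,
            "EventParameters": [
                {"ParameterID": "HapticIntensity", "ParameterValue": intensity},
                {"ParameterID": "HapticSharpness", "ParameterValue": sharpness},
            ],
        }})
    return {"Version": 1.0, "Pattern": events}

def to_android_waveform(pulses):
    """Build (timings_ms, amplitudes) for VibrationEffect.createWaveform.

    Each timing is a segment length; amplitude 0 means off, 1-255 means on.
    """
    timings, amplitudes = [], []
    cursor = 0
    for start_ms, dur_ms, intensity in pulses:
        if start_ms > cursor:                 # silent gap before this pulse
            timings.append(start_ms - cursor)
            amplitudes.append(0)
        timings.append(dur_ms)
        amplitudes.append(max(1, round(intensity * 255)) if intensity > 0 else 0)
        cursor = start_ms + dur_ms
    return timings, amplitudes

print(json.dumps(to_ahap(PATTERN), indent=2))
print(to_android_waveform(PATTERN))   # ([30, 70, 200], [255, 0, 128])
```

The two outputs encode the same feel in different models: AHAP describes events at absolute times, while the Android arrays describe back-to-back segments, so even a simple converter has to synthesize the silent gaps.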

Future Trends in System Haptics

The future of system haptics is not just about better vibrations—it’s about creating a seamless, multi-sensory digital experience that feels indistinguishable from reality.

Haptic Gloves and Full-Body Suits

Next-generation VR will rely on haptic gloves and suits to deliver realistic touch feedback. HaptX builds gloves that combine microfluidic actuators with force feedback, while bHaptics makes vests and sleeves lined with arrays of vibration motors.

- HaptX Gloves simulate texture, temperature, and grip force.

- bHaptics suits provide torso, arm, and hand feedback.

- Used in enterprise training, gaming, and teleoperation.

These systems are still expensive and bulky, but miniaturization and cost reduction are expected within the next 5 years.

Air-Based Haptics and Ultrasonic Feedback

Air-based haptics use ultrasound waves to create tactile sensations in mid-air. Users can ‘feel’ virtual buttons or objects without wearing any device.

- Ultrahaptics (now Ultraleap) pioneered this technology.

- Used in automotive dashboards and public kiosks.

- Enables touchless interaction in sterile environments (e.g., hospitals).

While still in early adoption, this technology could revolutionize interfaces where hygiene or mobility is a concern.
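Mid-air systems create a touchable point by firing an array of ultrasonic transducers with carefully chosen phase offsets, so that their waves arrive in step at one spot above the array. The sketch computes those offsets for a small row of emitters. The 40 kHz frequency, 343 m/s speed of sound, and array geometry are illustrative assumptions, and a real device also modulates the focal point at a low frequency so the skin can actually feel it.

```python
import math

SPEED_OF_SOUND = 343.0     # m/s in air at about 20 degrees C (assumption)
FREQUENCY = 40_000.0       # Hz, assumed transducer frequency

def focus_phases(emitters, focus):
    """Phase offset (radians, in [0, 2*pi)) per emitter so all waves arrive in phase at `focus`.

    emitters: list of (x, y, z) positions in metres; focus: (x, y, z) in metres.
    """
    k = 2 * math.pi * FREQUENCY / SPEED_OF_SOUND   # wavenumber, rad/m
    phases = []
    for pos in emitters:
        d = math.dist(pos, focus)
        # Travel adds k*d of phase, so pre-shift by -k*d (wrapped into [0, 2*pi)).
        phases.append((-k * d) % (2 * math.pi))
    return phases

# Eight emitters 1 cm apart along x, focusing 20 cm above the middle of the row.
row = [(i * 0.01, 0.0, 0.0) for i in range(8)]
target = (0.035, 0.0, 0.20)
print([round(p, 2) for p in focus_phases(row, target)])
```

Moving the focal point is just a matter of recomputing the phases, which is how these systems can trace shapes or follow a hand in real time.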

“The ultimate goal is to make the digital world feel as tangible as the physical one.” — Tom Carter, CTO of Ultraleap

AI-Driven Personalized Haptics

Artificial intelligence is beginning to personalize haptic feedback based on user preferences, physiology, and context. Machine learning models can analyze how a user responds to different vibrations and adjust patterns accordingly.

- Adapts haptic intensity based on skin sensitivity.

- Learns user habits to optimize feedback timing.

- Enhances accessibility for users with sensory impairments.

Google’s Project Soli, a miniature radar that senses fine hand gestures, and Apple’s on-device machine learning are examples of the sensing and intelligence that could make haptics more intuitive and less intrusive.
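A full machine-learning pipeline is beyond a short example, but the core feedback loop can be sketched with a much simpler stand-in: nudge a user’s preferred intensity up when they report a missed cue and down when they report one as too strong, moving only a fraction of the way each time. This is a plain online update rule, not a trained model, and the step size and bounds are hypothetical.

```python
class IntensityPreference:
    """Tracks a per-user haptic intensity from simple 'missed' / 'too_strong' feedback."""

    def __init__(self, start=0.5, step=0.2, lo=0.1, hi=1.0):
        self.level = start
        self.step = step          # fraction of the distance to the bound covered per report
        self.lo, self.hi = lo, hi

    def report(self, feedback):
        if feedback == "missed":          # cue went unnoticed: move toward hi
            self.level += self.step * (self.hi - self.level)
        elif feedback == "too_strong":    # cue felt intrusive: move toward lo
            self.level -= self.step * (self.level - self.lo)
        else:
            raise ValueError("feedback must be 'missed' or 'too_strong'")
        return self.level

pref = IntensityPreference()
pref.report("missed")       # level moves from 0.5 to about 0.6
pref.report("too_strong")   # and back to about 0.5
```

Moving a fraction of the remaining distance keeps the level inside its bounds without explicit clamping and makes each individual report low-stakes.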

System Haptics and User Experience Design

Integrating system haptics into user experience (UX) design requires more than just adding vibrations—it demands intentionality, subtlety, and empathy.

Principles of Effective Haptic Design

Great haptic design is invisible when done right. It should enhance, not distract. Designers follow key principles to ensure feedback is meaningful and context-aware.

- Relevance: Feedback should correspond to a user action or system state.

- Consistency: Similar actions should produce similar haptic responses.

- Subtlety: Avoid overuse that leads to sensory fatigue.

- Accessibility: Provide alternatives for users who may not feel vibrations.

Apple’s Human Interface Guidelines recommend using haptics for confirmation, navigation, and alerts—but never as the sole feedback mechanism.

Common Haptic Design Mistakes

Poorly implemented haptics can frustrate users. Common pitfalls include excessive vibration, inconsistent patterns, and lack of user control.

- Using haptics for every minor event (e.g., every keystroke).

- Ignoring user preferences or accessibility settings.

- Failing to test across different devices and skin types.

A Nielsen Norman Group study found that 78% of users disable haptics when they feel intrusive or unpredictable.
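Two of the mistakes above, overuse and ignoring user preferences, can be guarded against with one small gate that every haptic request passes through. The sketch drops all requests when the user has turned haptics off and rate-limits minor events. The 100 ms minimum interval and the priority names are assumptions for illustration, not values from any platform guideline.

```python
class HapticGate:
    """Filters haptic requests: honors the user's setting and rate-limits minor events."""

    def __init__(self, enabled=True, min_interval_ms=100):
        self.enabled = enabled
        self.min_interval_ms = min_interval_ms
        self._last_minor_ms = None

    def allow(self, now_ms, priority="minor"):
        if not self.enabled:
            return False                  # user opted out: never vibrate
        if priority == "critical":
            return True                   # alarms and errors always get through
        if (self._last_minor_ms is not None
                and now_ms - self._last_minor_ms < self.min_interval_ms):
            return False                  # too soon after the last minor cue
        self._last_minor_ms = now_ms
        return True

gate = HapticGate()
print([gate.allow(t) for t in (0, 30, 60, 120)])  # [True, False, False, True]
```

Dropped requests do not reset the timer, so a burst of rapid keystrokes produces at most one tap per interval instead of a continuous buzz.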

Best Practices for Developers

Developers integrating system haptics should follow platform-specific guidelines and test extensively.

- Use native APIs (e.g., Core Haptics on iOS, the Vibrator and VibrationEffect APIs on Android).

- Allow users to customize or disable haptics.

- Test on real devices, not emulators.

- Document haptic patterns for team consistency.

Tools like Haply and Haptician are emerging to help designers prototype and test haptic experiences before coding.

What are system haptics?

System haptics is the technology that provides tactile feedback in electronic devices through vibrations, forces, and motions. It enhances user interaction by simulating touch in digital environments.

How do system haptics improve user experience?

They provide immediate, intuitive feedback for actions like typing, scrolling, or receiving alerts. This reduces cognitive load, improves accessibility, and increases immersion in apps and games.

Which devices use system haptics?

Smartphones (e.g., iPhone), wearables (e.g., Apple Watch), gaming controllers (e.g., DualSense), VR systems, and medical simulators all use system haptics to enhance functionality and realism.

Are haptics bad for battery life?

They can be, especially high-intensity or continuous haptics. However, modern actuators like LRAs and optimized drivers help minimize power consumption.

Can haptics be personalized?

Yes, emerging AI-driven systems can adapt haptic feedback based on user preferences, sensitivity, and usage patterns, offering a more tailored experience.

System haptics has evolved from simple buzzes to sophisticated, context-aware tactile feedback that enriches digital interactions. From smartphones to surgical simulators, this technology is reshaping how we perceive and interact with machines. As innovation continues, we’re moving toward a future where the line between digital and physical touch blurs—ushering in a new era of immersive, intuitive, and intelligent interfaces.
