System Failure: 7 Shocking Causes and How to Prevent Them

Ever felt like everything’s going smoothly—until it suddenly isn’t? That moment when lights flicker, servers crash, or traffic grinds to a halt? That’s system failure in action. It’s not just a glitch—it’s a breakdown with real-world consequences.

What Exactly Is a System Failure?

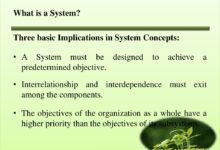

A system failure occurs when a complex network—be it technological, organizational, or biological—stops functioning as intended. This can range from a single server crashing to entire cities losing power. The key is that multiple components, designed to work together, fail to do so, often with cascading effects.

Defining System Failure in Modern Contexts

In engineering and IT, a system failure is formally defined as the inability of a system to perform its required functions within specified limits. According to the International Organization for Standardization (ISO), this includes both complete shutdowns and partial malfunctions that degrade performance.

- Failures can be sudden or gradual.

- They may affect hardware, software, human processes, or a combination.

- Examples include power grid collapses, software bugs in banking apps, and supply chain disruptions.

Types of System Failures

Not all system failures are created equal. They fall into several categories based on origin, scope, and impact:

- Hardware Failure: Physical components like servers, routers, or circuit breakers stop working.

- Software Failure: Bugs, memory leaks, or flawed algorithms cause crashes or incorrect outputs.

- Human Error: Mistakes in operation, configuration, or decision-making trigger failures.

- Environmental Failure: Natural disasters, extreme weather, or electromagnetic interference disrupt operations.

- Cascading Failure: One failure triggers a chain reaction across interconnected systems.

“A system is only as strong as its weakest link—especially when that link is connected to hundreds of others.” — Dr. Elena Torres, Systems Resilience Researcher

Historical System Failures That Changed the World

Some system failures are so significant they alter the course of technology, policy, or public awareness. These events serve as cautionary tales and catalysts for innovation.

The Northeast Blackout of 2003

On August 14, 2003, a software bug in the alarm function of an Ohio utility’s energy management system left operators unaware of a growing overload. Once the cascade began, large parts of the Northeastern and Midwestern United States and the Canadian province of Ontario lost power within minutes. About 50 million people were affected, and the economic cost has been estimated at around $6 billion.

- The root cause was a combination of software failure and human oversight.

- It exposed weaknesses in grid monitoring and interdependence between regional utilities.

- As a result, the North American Electric Reliability Corporation (NERC) implemented stricter reliability standards.

The Therac-25 Radiation Therapy Machine Disaster

In the mid-1980s, the Therac-25, a medical device designed to deliver radiation therapy, malfunctioned due to a software race condition. At least six patients received massive overdoses of radiation, leading to severe injuries and deaths.

- The failure stemmed from poor software design and lack of hardware safety interlocks.

- It became a landmark case in software engineering ethics and safety-critical systems.

- The incident is now taught in computer science and medical engineering programs worldwide.

“The Therac-25 tragedy wasn’t just a technical failure—it was a failure of culture, oversight, and accountability.” — Nancy Leveson, MIT Professor of Aeronautics and Astronautics

Common Causes of System Failure

Understanding the root causes of system failure is the first step toward prevention. While each incident has unique circumstances, certain patterns recur across industries.

Poor Design and Architecture

Many system failures originate in the design phase. Systems that lack redundancy, scalability, or clear failure modes are inherently vulnerable.

- Single points of failure (SPOFs) are a major risk—when one component fails, the whole system collapses.

- Overly complex architectures make troubleshooting difficult and increase the likelihood of unforeseen interactions.

- Example: In the 2010 Flash Crash in the U.S. stock market, automated trading algorithms amplified a large sell order, and there were no stock-level circuit breakers in place to halt the slide.
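
To make the cost of a single point of failure concrete, here is a minimal sketch of the standard series and parallel availability formulas. The 99% figures and the three-component chain are hypothetical, and the calculation assumes components fail independently, which real systems often violate.

```python
from math import prod


def series_availability(availabilities):
    """All components must work, so their availabilities multiply."""
    return prod(availabilities)


def parallel_availability(availabilities):
    """Only one redundant component must work: 1 minus the chance all fail."""
    return 1 - prod(1 - a for a in availabilities)


# Hypothetical chain: load balancer, app server, database.
# Each is available 99% of the time and each is a single point of failure.
chain = series_availability([0.99, 0.99, 0.99])

# Same chain, but the database is now two independent replicas.
redundant_db = parallel_availability([0.99, 0.99])
improved = series_availability([0.99, 0.99, redundant_db])

print(f"no redundancy: {chain:.4f}")
print(f"redundant db:  {improved:.4f}")
```

Under these assumptions, chaining single points of failure pulls overall availability below that of any individual component, while duplicating one link removes most of that link's contribution to downtime.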

Inadequate Testing and Monitoring

Even well-designed systems can fail if they aren’t rigorously tested under real-world conditions. Monitoring gaps mean problems go unnoticed until it’s too late.

- Load testing, stress testing, and chaos engineering (like Netflix’s Chaos Monkey) help identify weaknesses.

- Real-time monitoring tools like Prometheus or Datadog can detect anomalies before they escalate.

- Lack of logging and alerting was a factor in the 2017 British Airways IT meltdown that stranded 75,000 passengers.
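
As an illustration of the kind of anomaly check a monitoring tool performs, the sketch below tracks a rolling error rate and flags it when it crosses a threshold. The class name, window size, and threshold are assumptions made for this example; it is not how Prometheus or Datadog implement alerting.

```python
from collections import deque


class ErrorRateMonitor:
    """Tracks the last `window` request outcomes and flags a high error rate."""

    def __init__(self, window=100, threshold=0.05):
        self.outcomes = deque(maxlen=window)  # True means the request failed
        self.threshold = threshold

    def record(self, failed):
        self.outcomes.append(bool(failed))

    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Require a full window so one early failure does not trigger an alert.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.error_rate() > self.threshold


# Hypothetical traffic: seven successes followed by three failures.
monitor = ErrorRateMonitor(window=10, threshold=0.2)
for failed in [False] * 7 + [True] * 3:
    monitor.record(failed)

print(monitor.error_rate(), monitor.should_alert())
```

The fixed-size window means old outcomes age out on their own, so the alert clears once recent traffic is healthy again.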

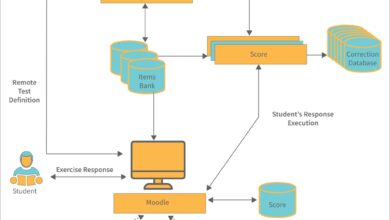

System Failure in Technology and IT Infrastructure

In the digital age, system failure often means IT infrastructure collapse. From cloud outages to data breaches, the stakes are higher than ever.

Cloud Service Outages

Major cloud providers like AWS, Google Cloud, and Microsoft Azure have experienced significant outages. In December 2021, AWS’s us-east-1 region went down, affecting thousands of websites and services including Slack, Netflix, and Robinhood.

- AWS attributed the outage to an automated capacity-scaling activity that triggered unexpected client behavior and congested its internal network.

- Despite redundancy, the failure propagated due to interdependencies.

- Businesses learned the hard way about the risks of over-reliance on a single cloud provider.
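
One way to reason about how a failure propagates through interdependencies is to walk the dependency graph. The sketch below is a simplified model: the service names and topology are hypothetical (not a description of any real provider), and it assumes every dependency is a hard one, so losing any dependency takes the dependent service down.

```python
from collections import deque


def affected_services(depends_on, failed):
    """Return every service that goes down, directly or transitively, when `failed` does.

    `depends_on` maps each service to the services it needs in order to run.
    """
    # Invert the map: for each service, which services depend on it?
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    down = {failed}
    queue = deque([failed])
    while queue:  # breadth-first walk over the dependents
        current = queue.popleft()
        for service in dependents.get(current, ()):
            if service not in down:
                down.add(service)
                queue.append(service)
    return down


# Hypothetical topology for illustration only.
depends_on = {
    "internal-network": [],
    "dns": ["internal-network"],
    "auth": ["dns"],
    "monitoring": ["internal-network"],
    "checkout": ["auth", "dns"],
    "static-site": [],
}

print(sorted(affected_services(depends_on, "internal-network")))
```

In this toy graph, losing the internal network also takes down monitoring, which hints at why operators can lose visibility at the moment they need it most.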

Data Center Failures

Data centers are the backbone of the internet, but they’re vulnerable to power loss, cooling failures, and physical damage.

- In March 2021, a fire at a data center in Strasbourg, France, knocked out millions of websites hosted by OVHcloud.

- Redundant power supplies, fire suppression systems, and geographic distribution are critical for resilience.

- Best practices now include multi-region backups and automated failover systems.

System Failure in Critical Infrastructure

When systems fail in sectors like energy, transportation, or healthcare, the consequences can be life-threatening. These are not just technical issues—they’re public safety concerns.

Power Grid Failures

Modern power grids are vast, interconnected networks. A failure in one area can cascade across regions.

- The 2011 Southwest Blackout, triggered by a technician’s error at an Arizona substation, cut power to San Diego, parts of Arizona, and parts of Baja California, Mexico.

- Renewable energy integration adds complexity, as solar and wind are intermittent and require smart grid technologies.

- Smart meters and AI-driven load balancing are being deployed to improve grid stability.

Transportation System Collapse

From air traffic control systems to subway signaling, transportation relies on precise coordination. Failures can lead to delays, accidents, or fatalities.

- In January 2023, a failure in the FAA’s Notice to Airmen (NOTAM) system, which the agency traced to a damaged database file, halted domestic departures across the U.S. and delayed thousands of flights.

- London’s Underground has faced repeated signal failures due to aging infrastructure.

- Autonomous vehicles introduce new risks, such as sensor misinterpretation or AI decision errors.

Human Factors in System Failure

Despite advances in automation, humans remain a critical part of most systems. Human error, poor training, or organizational culture can all contribute to system failure.

The Role of Human Error

Studies suggest that up to 90% of IT incidents involve some degree of human error. This includes misconfigurations, accidental deletions, and poor judgment under pressure.

- The 2017 Amazon S3 outage was caused by an engineer typing a command incorrectly during debugging.

- Simple safeguards like confirmation prompts, access controls, and audit logs can prevent such mistakes.

- Blame-free post-mortems encourage transparency and learning, rather than punishment.
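
The safeguards mentioned above can be sketched as a guard that validates a destructive request before anything is touched. The function name, the minimum-capacity rule, and the server names are hypothetical; this shows the general idea, not any vendor's actual tooling.

```python
class UnsafeRemoval(Exception):
    """Raised when a removal request breaches a safety limit."""


def plan_removal(fleet, to_remove, min_remaining, confirm=False):
    """Return the servers left after removal, or raise if the request looks unsafe."""
    targets = set(to_remove)
    unknown = targets - set(fleet)
    if unknown:
        raise UnsafeRemoval(f"unknown servers: {sorted(unknown)}")

    remaining = [server for server in fleet if server not in targets]
    if len(remaining) < min_remaining:
        raise UnsafeRemoval(
            f"would leave {len(remaining)} servers, minimum is {min_remaining}"
        )
    if not confirm:
        raise UnsafeRemoval(f"pass confirm=True to remove {sorted(targets)}")
    return remaining


fleet = ["s1", "s2", "s3", "s4"]  # hypothetical server names

# A mistyped command that selects too many servers is rejected up front.
try:
    plan_removal(fleet, ["s1", "s2", "s3"], min_remaining=2, confirm=True)
except UnsafeRemoval as err:
    print(f"blocked: {err}")
```

Separating the plan from the execution also gives a natural place to write an audit log entry before any change is applied.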

Organizational and Cultural Failures

Sometimes, the problem isn’t individuals—it’s the culture. Companies that prioritize speed over safety, or discourage dissent, create environments where failures are more likely.

- NASA’s Challenger disaster in 1986 was not just an engineering failure but a cultural one—engineers’ warnings were ignored.

- Google’s Site Reliability Engineering (SRE) model emphasizes psychological safety and blameless post-mortems.

- Leadership must foster a culture where reporting near-misses is encouraged, not punished.

Preventing System Failure: Strategies and Best Practices

While no system can be 100% failure-proof, many failures are preventable with the right strategies. Resilience, redundancy, and continuous improvement are key.

Implementing Redundancy and Failover Systems

Redundancy means having backup components that can take over if the primary ones fail.

- In aviation, critical systems like flight controls have triple redundancy.

- In IT, database replication and load balancers ensure uptime during server failures.

- However, redundancy alone isn’t enough—failover mechanisms must be tested regularly.
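
Testing failover regularly can be as simple as simulating the loss of each replica and checking that another one takes over. The sketch below assumes a priority-ordered list of replicas and a health-check callable; the replica names and helper functions are hypothetical.

```python
def pick_healthy(replicas, is_healthy):
    """Return the first replica, in priority order, that passes its health check."""
    for replica in replicas:
        if is_healthy(replica):
            return replica
    raise RuntimeError("no healthy replica available")


def failover_drill(replicas, is_healthy):
    """Simulate losing each replica in turn and report which one would take over."""
    results = {}
    for lost in replicas:
        # Treat the "lost" replica as unhealthy for this round of the drill.
        check = lambda r, lost=lost: r != lost and is_healthy(r)
        results[lost] = pick_healthy(replicas, check)
    return results


# Hypothetical replica names and health state.
replicas = ["db-primary", "db-standby-1", "db-standby-2"]
health = {"db-primary": True, "db-standby-1": True, "db-standby-2": True}

drill = failover_drill(replicas, health.get)
print(drill)
```

If the drill raises `RuntimeError` for any replica, that replica is effectively a single point of failure despite the nominal redundancy.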

Adopting Resilience Engineering Principles

Resilience engineering focuses on a system’s ability to adapt and recover from disruptions, rather than just preventing them.

- Instead of asking “How do we prevent failure?”, resilience engineering asks “How do we respond when failure happens?”

- Techniques include real-time monitoring, automated recovery scripts, and incident response drills.

- The U.S. Department of Homeland Security uses resilience frameworks for critical infrastructure protection.
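
A common building block for automated recovery is retrying a failed operation with exponential backoff. The sketch below is one minimal version; the flaky health check is a stand-in for a real dependency, and the delays are recorded rather than slept so the example runs instantly.

```python
import time


def retry_with_backoff(operation, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call `operation` until it succeeds, doubling the wait after each failure."""
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)


# Hypothetical flaky dependency: fails twice, then recovers.
calls = {"count": 0}


def flaky_health_check():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("service not ready")
    return "ok"


waits = []  # record the delays instead of actually sleeping
result = retry_with_backoff(flaky_health_check, sleep=waits.append)
print(result, waits)
```

Growing the delay gives a struggling service room to recover instead of being hit with a burst of immediate retries. Production versions usually add random jitter and a cap on the delay.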

The Future of System Failure: AI, Complexity, and Unknown Risks

As systems become more complex and interconnected, new types of system failure are emerging. Artificial intelligence, quantum computing, and global interdependence introduce both opportunities and vulnerabilities.

AI and Autonomous Systems

AI-driven systems can make decisions faster than humans, but they can also fail in unpredictable ways.

- In 2018, an autonomous Uber test vehicle struck and killed a pedestrian in Tempe, Arizona, after its software failed to classify her correctly in time to brake.

- AI models can suffer from “silent failures”—they keep running but produce incorrect results.

- Explainable AI (XAI) and continuous validation are essential to build trust and safety.
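
Continuous validation can catch a silent failure by scoring the model against a small labeled sample on a schedule. The sketch below assumes such a sample is available; the function names, the baseline, and the tolerance are illustrative choices rather than an established standard.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the known-correct labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equal-length, non-empty inputs")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def has_degraded(predictions, labels, baseline, tolerance=0.05):
    """True when accuracy on the sample falls more than `tolerance` below baseline."""
    return accuracy(predictions, labels) < baseline - tolerance


# Hypothetical spot-check: the model still returns answers without raising
# errors, but half of them are now wrong.
labels = ["cat", "dog", "cat", "dog", "cat", "dog", "cat", "dog", "cat", "dog"]
predictions = ["cat", "dog", "cat", "dog", "cat", "cat", "dog", "cat", "dog", "cat"]

print(accuracy(predictions, labels), has_degraded(predictions, labels, baseline=0.9))
```

Because the model never raises an error in this scenario, only a check against ground truth reveals the problem, which is what makes silent failures hard to spot with uptime monitoring alone.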

The Growing Complexity of Interconnected Systems

The Internet of Things (IoT), smart cities, and global supply chains create networks so complex that no single person fully understands them.

- A failure in a shipping container’s GPS system could disrupt an entire logistics network.

- Cyber-physical systems blur the line between digital and physical, increasing attack surfaces.

- System-of-systems engineering is emerging as a discipline to manage this complexity.

“We’re building systems we can’t fully comprehend. The next major system failure might not be a bug—it might be an emergent behavior we never anticipated.” — Dr. Rajiv Mehta, Complex Systems Analyst

What is a system failure?

A system failure occurs when a network of interconnected components stops functioning as intended, leading to degraded or complete loss of service. It can affect technology, infrastructure, organizations, or biological systems.

What are the most common causes of system failure?

The most common causes include poor design, software bugs, hardware malfunctions, human error, inadequate testing, and cascading failures due to interdependencies.

Can system failures be prevented?

While not all failures can be prevented, many can be mitigated through redundancy, rigorous testing, monitoring, resilience engineering, and fostering a safety-conscious organizational culture.

What was the worst system failure in history?

One of the worst was the 2003 Northeast Blackout, affecting 50 million people. Others include the Therac-25 radiation overdoses and the 2017 British Airways IT collapse.

How do companies recover from a system failure?

Recovery involves incident response, root cause analysis, system restoration, communication with stakeholders, and implementing changes to prevent recurrence. Post-mortem reviews are critical.

System failure is not just a technical issue—it’s a multifaceted challenge that spans engineering, human behavior, and organizational culture. From the Therac-25 tragedy to modern cloud outages, history shows that failures often stem from a combination of factors. The key to resilience lies in anticipating risks, building redundancy, and fostering a culture of learning. As systems grow more complex, especially with AI and IoT, the need for robust design and adaptive responses becomes even more urgent. The goal isn’t to eliminate failure entirely—that’s impossible—but to minimize its impact and recover quickly when it happens.
